Large Language Model Inference Framework Throughput Comparison: VLLM | SGLang | LMDeploy

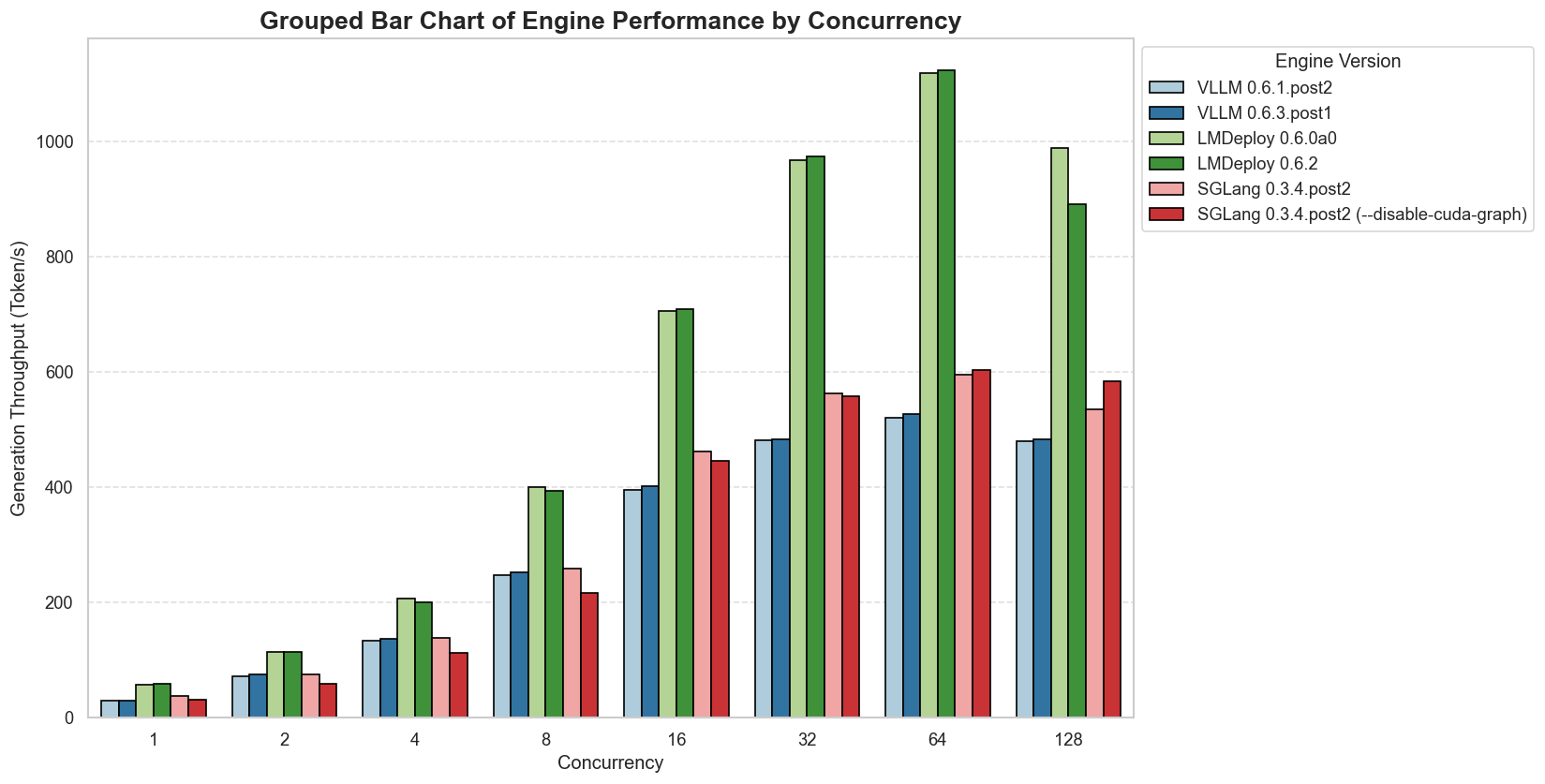

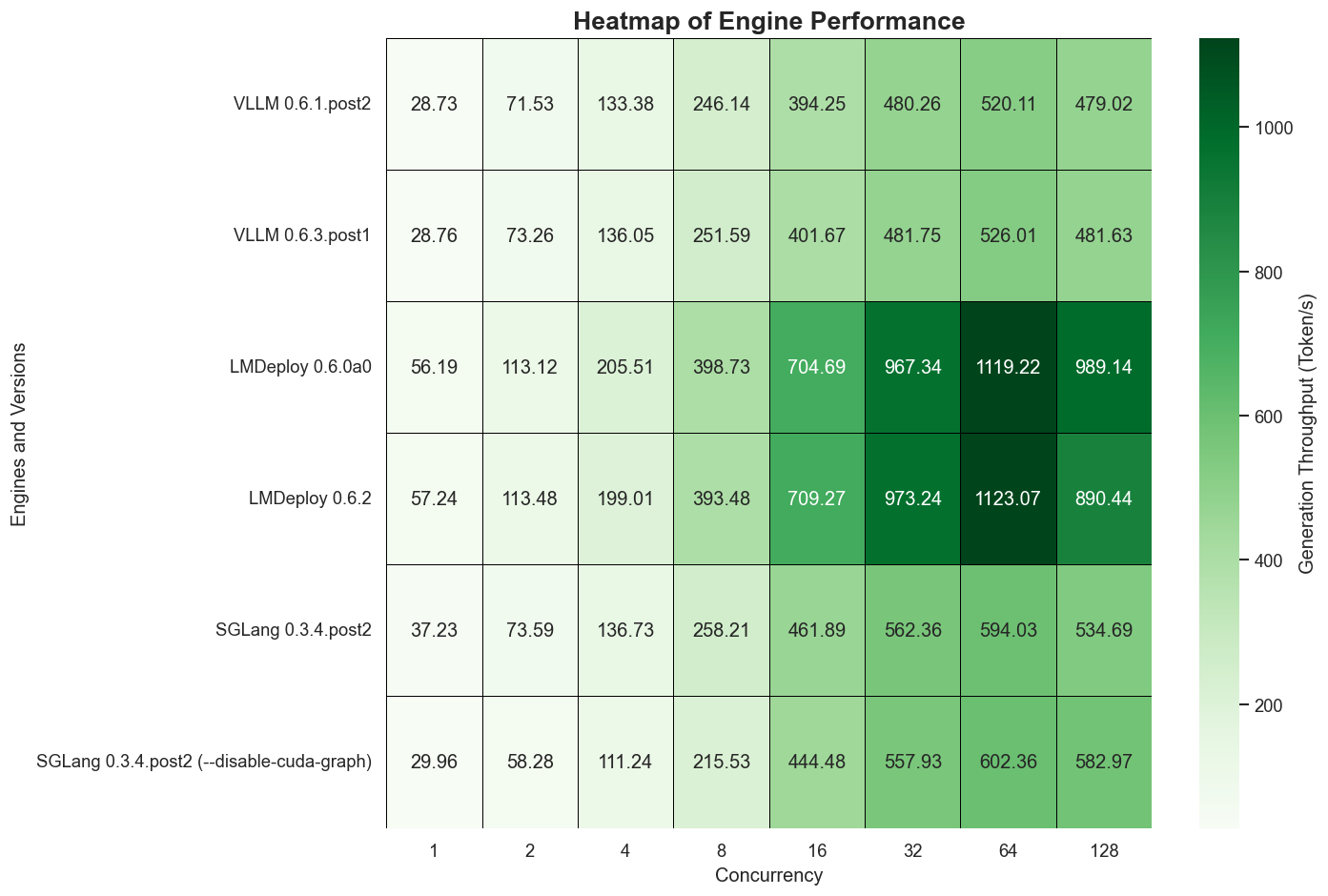

This article compares the throughput of three large language model inference engines, VLLM, SGLang, and LMDeploy, in a short-input, long-output scenario. Throughput is measured in output tokens per second. See the table below for the engine versions and concurrency levels tested, and the notes after it for the test model and hardware.
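To make the metric concrete, here is a minimal sketch of how aggregate output tokens per second at a given concurrency could be measured. It is not the script used for this article: `measure_throughput` and `fake_generate` are hypothetical names, and `fake_generate` is a stand-in that sleeps instead of calling a real engine. In practice it would be replaced by a request to whichever server is under test, returning the number of tokens generated.

```python
import asyncio
import time
from typing import Awaitable, Callable

# Hypothetical signature: takes a prompt, returns the number of output tokens produced.
GenerateFn = Callable[[str], Awaitable[int]]


async def measure_throughput(generate: GenerateFn, prompt: str, concurrency: int) -> float:
    """Send `concurrency` requests at once and return aggregate output tokens per second."""
    start = time.perf_counter()
    token_counts = await asyncio.gather(*(generate(prompt) for _ in range(concurrency)))
    elapsed = time.perf_counter() - start
    # Aggregate throughput: all output tokens divided by wall-clock time.
    return sum(token_counts) / elapsed


async def fake_generate(prompt: str) -> int:
    # Stand-in for a real engine call (assumption): pretend 100 tokens take 0.05 s.
    await asyncio.sleep(0.05)
    return 100


if __name__ == "__main__":
    for c in (1, 2, 4):
        tps = asyncio.run(measure_throughput(fake_generate, "Write a long story.", c))
        print(f"concurrency={c}: {tps:.0f} output tokens/s")
```

Dividing the summed output tokens by wall-clock time, rather than averaging per-request speeds, matches the "output tokens per second" unit used in the table.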

| Concurrency | VLLM 0.6.1.post2 | VLLM 0.6.3.post1 | LMDeploy 0.6.0a0 | LMDeploy 0.6.2 | SGLang 0.3.4.post2 | SGLang 0.3.4.post2 (`--disable-cuda-graph`) |
|---|---|---|---|---|---|---|
| 1 | 28.73 | 28.76 | 56.19 | 57.24 | 37.23 | 29.96 |
| 2 | 71.53 | 73.26 | 113.12 | 113.48 | 73.59 | 58.28 |
| 4 | 133.38 | 136.05 | 205.51 | 199.01 | 136.73 | 111.24 |
| 8 | 246.14 | 251.59 | 398.73 | 393.48 | 258.21 | 215.53 |
| 16 | 394.25 | 401.67 | 704.69 | 709.27 | 461.89 | 444.48 |
| 32 | 480.26 | 481.75 | 967.34 | 973.24 | 562.36 | 557.93 |
| 64 | 520.11 | 526.01 | 1119.22 | 1123.07 | 594.03 | 602.36 |
| 128 | 479.02 | 481.63 | 989.14 | 890.44 | 534.69 | 582.97 |

- Test model: Qwen2.5-14B-Instruct-AWQ

- Hardware: Intel Xeon E5-2680 v4 + 1 × RTX 2080 Ti (22 GB)
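
The numbers in the table can be summarized with a short script. The sketch below copies the newest default-settings version of each engine from the table and computes two things: the concurrency at which each engine peaks, and the per-concurrency ratio of LMDeploy to VLLM. The helper names `peak` and `ratio` are just for illustration.

```python
# Output tokens/s copied from the table above (newest default-settings run of each engine).
concurrency = [1, 2, 4, 8, 16, 32, 64, 128]
throughput = {
    "VLLM 0.6.3.post1": [28.76, 73.26, 136.05, 251.59, 401.67, 481.75, 526.01, 481.63],
    "LMDeploy 0.6.2": [57.24, 113.48, 199.01, 393.48, 709.27, 973.24, 1123.07, 890.44],
    "SGLang 0.3.4.post2": [37.23, 73.59, 136.73, 258.21, 461.89, 562.36, 594.03, 534.69],
}


def peak(series):
    """Return (concurrency, throughput) at the highest measured throughput."""
    best = max(range(len(series)), key=lambda i: series[i])
    return concurrency[best], series[best]


def ratio(a, b):
    """Element-wise throughput ratio a / b, rounded to two decimals."""
    return [round(x / y, 2) for x, y in zip(a, b)]


if __name__ == "__main__":
    for name, series in throughput.items():
        c, tps = peak(series)
        print(f"{name}: peaks at concurrency {c} with {tps} tokens/s")
    speedup = ratio(throughput["LMDeploy 0.6.2"], throughput["VLLM 0.6.3.post1"])
    print("LMDeploy / VLLM:", dict(zip(concurrency, speedup)))
```

On this data, all three engines peak at a concurrency of 64 and lose throughput at 128, and LMDeploy 0.6.2 delivers roughly 1.5x to 2.1x the throughput of VLLM 0.6.3.post1 depending on the concurrency level.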