Is Flash Attention 2 a Significant Improvement? Not Necessarily

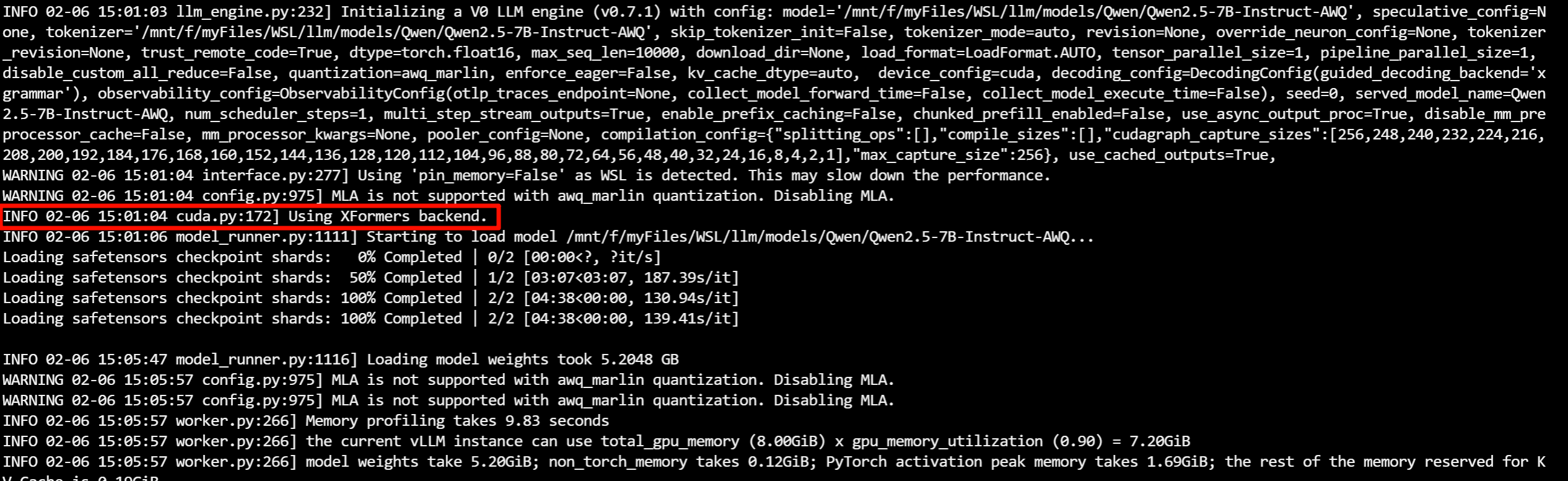

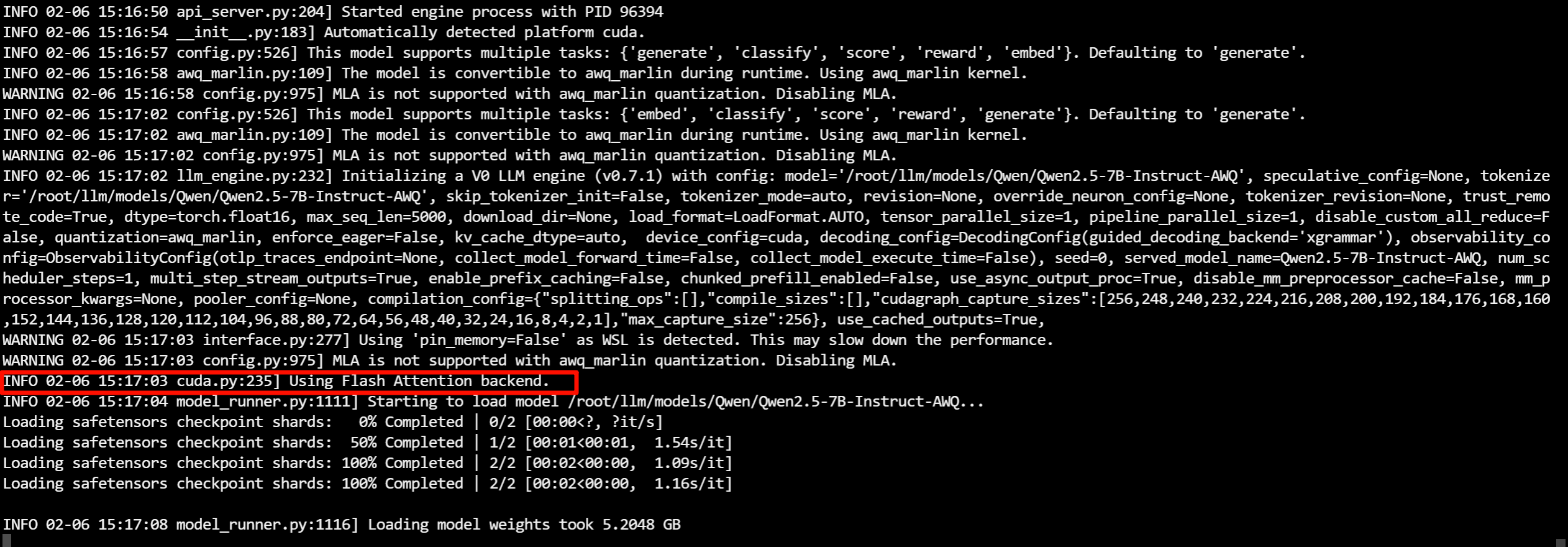

This article compares vLLM's performance when using xformers and when using FlashAttention 2 as the attention backend.

Errata

Subsequent tests showed that on a 30-series card, even when the vLLM inference API server is started with the VLLM_ATTENTION_BACKEND=XFORMERS environment variable and the startup settings report XFORMERS, the attention computation still appears to be accelerated by something else (most likely FlashAttention 2). I therefore reran the tests and found that FlashAttention 2 does provide a solid improvement. See the new article for detailed results.

The following is the original content of this article, unmodified.

Introduction

The reason for writing this article is that I haven't seen any quantitative analysis of the performance difference between inference frameworks using xformers and FlashAttention 2. Everything you can find says FA2 is great, cutting memory usage by xx% and speeding up attention by xx%, but none of those tests make a truly fair comparison of an inference engine (e.g., vLLM) running on each of the two attention implementations. My main device is a 2080 Ti (environmentalist~~, definitely not because I'm poor~~). Turing cards like it have been listed as "coming soon" for FA2 support for a long time (more than a year), and with the RTX 50 series now released, I expect that support will never arrive.

I especially wanted to know how much performance I'm giving up by using such an old card, so I tested whether FA2 actually makes a difference in a real LLM inference setup, running the comparison on an RTX 30-series card that does support it.
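Whether a card can use FA2 at all comes down to its GPU architecture. The FlashAttention 2 README lists Ampere, Ada and Hopper GPUs as supported and says Turing support is still to come, which in compute-capability terms roughly means 8.0 and above. The sketch below encodes that rule of thumb; the threshold is an assumption rather than an official check, `fa2_supported` is a hypothetical helper, and the PyTorch query is optional.

```python
from typing import Tuple

# Compute capabilities of the two cards mentioned in this article.
EXAMPLES = {
    "GeForce RTX 2080 Ti (Turing)": (7, 5),
    "GeForce RTX 3070 (Ampere)": (8, 6),
}


def fa2_supported(capability: Tuple[int, int]) -> bool:
    """Rule of thumb (assumption): FA2 kernels target compute capability 8.0+."""
    major, _minor = capability
    return major >= 8


if __name__ == "__main__":
    for name, cap in EXAMPLES.items():
        print(f"{name}: sm_{cap[0]}{cap[1]} -> FA2 supported: {fa2_supported(cap)}")
    try:
        import torch  # optional: only used to query the local GPU
    except ImportError:
        torch = None
    if torch is not None and torch.cuda.is_available():
        cap = torch.cuda.get_device_capability(0)
        name = torch.cuda.get_device_name(0)
        print(f"{name}: sm_{cap[0]}{cap[1]} -> FA2 supported: {fa2_supported(cap)}")
```

Newer architectures may still need a matching FA2 build, so treat a `True` here as "eligible", not "guaranteed to work".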

Up front, I should state that these results reflect only what I measured on my own machine and may have limitations. If you have different opinions or insights, please contact me.

Test environment

Hardware environment

```
# nvidia-smi
Thu Feb  6 15:37:54 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 565.65                 Driver Version: 566.07         CUDA Version: 12.7     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce RTX 3070 ...    On  |   00000000:01:00.0  On |                  N/A |
| N/A   49C    P8             14W /   95W |     672MiB /   8192MiB |     27%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|  No running processes found                                                             |
+-----------------------------------------------------------------------------------------+
```

Software environment

```
# pip show vllm
Name: vllm
Version: 0.7.1
Summary: A high-throughput and memory-efficient inference and serving engine for LLMs
Home-page: https://github.com/vllm-project/vllm
Author: vLLM Team
Author-email:
License: Apache 2.0
Location: /root/miniconda3/envs/vllm/lib/python3.11/site-packages
Requires: aiohttp, blake3, cloudpickle, compressed-tensors, depyf, einops, fastapi, filelock, gguf, importlib_metadata, lark, lm-format-enforcer, mistral_common, msgspec, numpy, nvidia-ml-py, openai, outlines, partial-json-parser, pillow, prometheus-fastapi-instrumentator, prometheus_client, protobuf, psutil, py-cpuinfo, pydantic, pyyaml, pyzmq, ray, requests, sentencepiece, tiktoken, tokenizers, torch, torchaudio, torchvision, tqdm, transformers, typing_extensions, uvicorn, xformers, xgrammar
Required-by:
```

Testing tool

https://github.com/Yoosu-L/llmapibenchmark
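llmapibenchmark reports TTFT (time to first token) alongside throughput. Its internals are not covered here; purely as an illustration, the sketch below measures TTFT for a single streaming request against vLLM's OpenAI-compatible `/v1/chat/completions` endpoint using only the Python standard library. `delta_content` and `measure_ttft` are hypothetical helpers, and a real benchmark would also send concurrent requests and count output tokens.

```python
import json
import time
import urllib.request
from typing import Optional


def delta_content(raw_line: bytes) -> Optional[str]:
    """Return the text carried by one streamed chat-completion SSE line, if any."""
    line = raw_line.decode("utf-8").strip()
    if not line.startswith("data: ") or line == "data: [DONE]":
        return None
    choices = json.loads(line[len("data: "):]).get("choices") or []
    if not choices:
        return None
    # The first chunk often carries only the role, so empty content is skipped.
    return choices[0].get("delta", {}).get("content") or None


def measure_ttft(base_url: str, model: str, prompt: str, max_tokens: int = 512) -> Optional[float]:
    """Seconds from sending one streaming request until the first generated text arrives."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": True,
    }).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request) as response:
        # The server streams Server-Sent Events, one "data: {...}" line per chunk.
        for raw_line in response:
            if delta_content(raw_line):
                return time.perf_counter() - start
    return None
```

Against the server used in these tests, a call would look like `measure_ttft("http://localhost:8008", "Qwen2.5-7B-Instruct-AWQ", "Hello")`.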

Test Results

XFORMERS

```bash
# Run vllm with XFORMERS attention backend
export VLLM_ATTENTION_BACKEND=XFORMERS
python -m vllm.entrypoints.openai.api_server --model=/root/llm/models/Qwen/Qwen2.5-7B-Instruct-AWQ --served-model-name=Qwen2.5-7B-Instruct-AWQ --dtype=float16 --tensor-parallel-size=1 --trust-remote-code --host=0.0.0.0 --port=8008 --gpu-memory-utilization=0.9 --max-model-len=5000
```
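An `export` stays in effect for the rest of the shell session, so switching backends means remembering to change it. A minimal, hypothetical Python launcher that sets `VLLM_ATTENTION_BACKEND` only for the server's child process could look like this. `build_server_command` is an illustrative helper, not part of vLLM, and the flags mirror the command above.

```python
import os
import shlex
import subprocess
import sys

# Model path and flags copied from the command in this article.
MODEL_PATH = "/root/llm/models/Qwen/Qwen2.5-7B-Instruct-AWQ"
TESTED_BACKENDS = {"XFORMERS", "FLASH_ATTN"}  # the two backends compared here


def build_server_command(backend: str, port: int = 8008):
    """Hypothetical helper: return (argv, env) for one vLLM API server run."""
    if backend not in TESTED_BACKENDS:
        raise ValueError(f"unexpected backend: {backend}")
    argv = [
        sys.executable, "-m", "vllm.entrypoints.openai.api_server",
        f"--model={MODEL_PATH}",
        "--served-model-name=Qwen2.5-7B-Instruct-AWQ",
        "--dtype=float16",
        "--tensor-parallel-size=1",
        "--trust-remote-code",
        "--host=0.0.0.0",
        f"--port={port}",
        "--gpu-memory-utilization=0.9",
        "--max-model-len=5000",
    ]
    # Copy the current environment and override only the backend variable,
    # so the setting applies to this child process and nothing else.
    env = {**os.environ, "VLLM_ATTENTION_BACKEND": backend}
    return argv, env


if __name__ == "__main__":
    args = sys.argv[1:]
    backend = next((a for a in args if a != "--run"), "XFORMERS")
    argv, env = build_server_command(backend)
    print(f"VLLM_ATTENTION_BACKEND={backend}", shlex.join(argv))
    if "--run" in args:
        subprocess.run(argv, env=env, check=True)  # blocks while the server runs
```

Without `--run` the script only prints the command, which makes it easy to check before starting a server.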

short input

Input Tokens: 45

Output Tokens: 512

Test Model: Qwen2.5-7B-Instruct-AWQ

Latency: 2.20 ms

| Concurrency | Generation Throughput (tokens/s) | Prompt Throughput (tokens/s) | Min TTFT (s) | Max TTFT (s) |
|---|---|---|---|---|
| 1 | 58.49 | 846.81 | 0.05 | 0.05 |
| 2 | 114.09 | 989.94 | 0.08 | 0.09 |
| 4 | 222.62 | 1193.99 | 0.11 | 0.15 |
| 8 | 414.35 | 1479.76 | 0.11 | 0.24 |
| 16 | 752.26 | 1543.29 | 0.13 | 0.47 |
| 32 | 653.94 | 1625.07 | 0.14 | 0.89 |

long input

Input Tokens: 2771

Output Tokens: 512

Test Model: Qwen2.5-7B-Instruct-AWQ

Latency: 3.60 ms

| Concurrency | Generation Throughput (tokens/s) | Prompt Throughput (tokens/s) | Min TTFT (s) | Max TTFT (s) |
|---|---|---|---|---|
| 1 | 45.64 | 1767.91 | 1.62 | 1.62 |
| 2 | 77.52 | 1743.44 | 1.67 | 3.28 |
| 4 | 71.76 | 1763.34 | 1.69 | 6.48 |

FLASH_ATTN

```bash
# Run vllm with FLASH_ATTN attention backend
export VLLM_ATTENTION_BACKEND=FLASH_ATTN
python -m vllm.entrypoints.openai.api_server --model=/root/llm/models/Qwen/Qwen2.5-7B-Instruct-AWQ --served-model-name=Qwen2.5-7B-Instruct-AWQ --dtype=float16 --tensor-parallel-size=1 --trust-remote-code --host=0.0.0.0 --port=8008 --gpu-memory-utilization=0.9 --max-model-len=5000
```

short input

Input Tokens: 45

Output Tokens: 512

Test Model: Qwen2.5-7B-Instruct-AWQ

Latency: 3.00 ms

| Concurrency | Generation Throughput (tokens/s) | Prompt Throughput (tokens/s) | Min TTFT (s) | Max TTFT (s) |
|---|---|---|---|---|
| 1 | 60.04 | 648.85 | 0.07 | 0.07 |
| 2 | 118.09 | 804.13 | 0.09 | 0.11 |
| 4 | 229.75 | 1030.40 | 0.13 | 0.17 |
| 8 | 431.84 | 1384.16 | 0.13 | 0.26 |
| 16 | 730.86 | 1538.19 | 0.13 | 0.47 |
| 32 | 692.52 | 1609.80 | 0.14 | 0.89 |

long input

Input Tokens: 2796

Output Tokens: 512

Test Model: Qwen2.5-7B-Instruct-AWQ

Latency: 3.20 ms

| Concurrency | Generation Throughput (tokens/s) | Prompt Throughput (tokens/s) | Min TTFT (s) | Max TTFT (s) |
|---|---|---|---|---|
| 1 | 44.18 | 1679.05 | 1.69 | 1.69 |
| 2 | 78.83 | 1654.61 | 1.74 | 3.41 |
| 4 | 75.70 | 1636.30 | 1.74 | 6.91 |
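To put the two runs side by side in relative terms, the sketch below computes the percentage change of FLASH_ATTN over XFORMERS for every row of the tables in this article. It uses plain Python with no dependencies; the data layout and helper names are illustrative.

```python
# Numbers copied from the result tables in this article:
# {concurrency: (XFORMERS, FLASH_ATTN)} for each (input length, metric) pair.
RESULTS = {
    ("short", "generation"): {
        1: (58.49, 60.04), 2: (114.09, 118.09), 4: (222.62, 229.75),
        8: (414.35, 431.84), 16: (752.26, 730.86), 32: (653.94, 692.52),
    },
    ("short", "prompt"): {
        1: (846.81, 648.85), 2: (989.94, 804.13), 4: (1193.99, 1030.40),
        8: (1479.76, 1384.16), 16: (1543.29, 1538.19), 32: (1625.07, 1609.80),
    },
    ("long", "generation"): {1: (45.64, 44.18), 2: (77.52, 78.83), 4: (71.76, 75.70)},
    ("long", "prompt"): {1: (1767.91, 1679.05), 2: (1743.44, 1654.61), 4: (1763.34, 1636.30)},
}


def pct_change(baseline: float, candidate: float) -> float:
    """Relative change of candidate over baseline, in percent."""
    return (candidate - baseline) / baseline * 100.0


def deltas(table):
    """(concurrency, % change of FLASH_ATTN vs XFORMERS), sorted by concurrency."""
    return [(c, pct_change(xf, fa)) for c, (xf, fa) in sorted(table.items())]


if __name__ == "__main__":
    for (length, metric), table in RESULTS.items():
        cells = ", ".join(f"{c}: {d:+.1f}%" for c, d in deltas(table))
        print(f"{length:>5} input, {metric:<10} throughput -> {cells}")
```

On these numbers, generation throughput moves by roughly -3% to +6% depending on concurrency, while prompt throughput is lower under FLASH_ATTN in every row, by up to about 23% (short input, concurrency 1).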

Conclusion

This result did not surprise me. Based on the hardware specifications, the model's parameter count, and the inference speed figures others have published for the H100 and RTX 4090, I had a hunch it would turn out this way.

Neither prompt processing speed nor generation speed improves much, and even the memory usage is the same, with GPU memory almost fully occupied once the batch size (concurrency) exceeds 16. That said, we cannot conclude that FA2 is useless: maybe I got some parameters wrong, or maybe it simply doesn't help on my 30-series card (even though the card does support FA2).
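One plausible reason the memory footprint looked identical: vLLM reserves GPU memory up front according to `--gpu-memory-utilization` (0.9 here), and the KV cache it fills is sized by the model's shape and the cache dtype, not by which attention kernel reads it. The sketch below estimates the per-token KV cache footprint. The Qwen2.5-7B values (28 layers, 4 KV heads, head dimension 128) are assumed from the model's published config, and the fp16 cache matches `--dtype=float16` in the commands (AWQ quantizes the weights, not the KV cache).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KVConfig:
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_elem: int = 2  # fp16 KV cache

    def bytes_per_token(self) -> int:
        # One K and one V vector per layer and per KV head.
        return 2 * self.num_layers * self.num_kv_heads * self.head_dim * self.bytes_per_elem

    def bytes_for(self, tokens: int, concurrency: int = 1) -> int:
        # Total cache needed if every request holds `tokens` tokens at once.
        return self.bytes_per_token() * tokens * concurrency


# Assumed shape of Qwen2.5-7B (grouped-query attention with 4 KV heads).
QWEN25_7B = KVConfig(num_layers=28, num_kv_heads=4, head_dim=128)

if __name__ == "__main__":
    # Input + output token counts from the test runs in this article.
    for label, tokens, conc in (("short", 45 + 512, 16), ("long", 2771 + 512, 4)):
        mib = QWEN25_7B.bytes_for(tokens, conc) / 2**20
        print(f"{label}: {conc} x {tokens} tokens -> {mib:.0f} MiB of KV cache")
```

The estimate ignores vLLM's block granularity and any activation memory, so it is a lower bound on what a given concurrency level needs, not a prediction of what nvidia-smi will show.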

This test is meaningless for most people: just pick a better graphics card, and new features will always be supported on newer hardware. Only a few people really care whether these new features are useful. Still, I hope I'm wrong somewhere; old graphics cards always have to be retired eventually, and who doesn't want the new ones to be better?